System configuration:

Hardware configuration

- two virtual machines (VMware)

- 1 vCPU

- 2 GB RAM –> bare minimum possible

- 40 GB Disk

- Storage exported via iSCSI

- 4 LUNs with 2 GB each

- 2 LUNs with 30 GB each

Operating system configuration

- Oracle Enterprise Linux 5.3 x86_64 (Kernel 2.6.18-128.el5)

- Installed packages: default system + development packages

Grid Infrastructure configuration:

- Cluster Name: “RAC”

- Binary installation on local disk

- OCR, Voting and datafiles stored in ASM

Steps to install Oracle 11g Release 2 Grid Infrastructure

- Configure Linux and pre-requirements

- Configure Storage

- Binary installation of grid infrastructure

- Installation of Oracle 11g Release 2 Database (either single-instance or RAC installation)

Configure Linux and pre-requirements

SWAP

- Between 1 and 2 GB RAM –> SWAP 1.5 times the size of RAM

- Between 2 and 16 GB RAM –> SWAP equal to the size of RAM

- > 16 GB RAM –> 16 GB SWAP
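The three rules above can be expressed as a small shell function. This is only a sketch: the function name is made up for illustration, and it assumes that a machine with exactly 2 GB RAM falls under the "1.5 times" rule.

```shell
#!/bin/bash
# Recommended swap size in MB for a given amount of RAM in MB,
# following the three rules listed above.
# Assumption: exactly 2 GB RAM is treated as "1.5 times the size of RAM".
recommended_swap_mb() {
    local ram_mb=$1
    if [ "$ram_mb" -le 2048 ]; then
        echo $(( ram_mb * 3 / 2 ))
    elif [ "$ram_mb" -le 16384 ]; then
        echo "$ram_mb"
    else
        echo 16384
    fi
}

# Example: the 2 GB virtual machines used in this setup
recommended_swap_mb 2048
```

For the 2 GB virtual machines used here this prints 3072, i.e. 3 GB of swap.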

Memory

- according to grid infrastructure documentation “>= 1 GB Memory”

- bare minimum from the author's experience:

- 1 GB for grid infrastructure components

- 500 MB for operating system

- 1 GB for cluster database SGA/PGA/UGA

- = 2.5 GB bare minimum!

Memory consumption with grid infrastructure installed: more than 800 MB for the infrastructure processes alone

Automatic Memory Management

Requires /dev/shm with an appropriate size (e.g. an SGA of 16 GB requires /dev/shm to be 16 GB or larger)

Huge Pages and Automatic Memory Management are INCOMPATIBLE

Checking required packages

(see required packages for single database installation; this applies here as well because we will install a database in the end)

According to the documentation the following packages are needed:

- binutils-2.17.50.0.6

- compat-libstdc++-33-3.2.3

- compat-libstdc++-33-3.2.3 (32 bit)

- elfutils-libelf-0.125

- elfutils-libelf-devel-0.125

- gcc-4.1.2, gcc-c++-4.1.2

- glibc-2.5-24, glibc-2.5-24 (32 bit)

- glibc-common-2.5

- glibc-devel-2.5

- glibc-devel-2.5 (32 bit)

- glibc-headers-2.5

- ksh-20060214

- libaio-0.3.106

- libaio-0.3.106 (32 bit)

- libaio-devel-0.3.106

- libaio-devel-0.3.106 (32 bit)

- libgcc-4.1.2, libgcc-4.1.2 (32 bit)

- libstdc++-4.1.2

- libstdc++-4.1.2 (32 bit)

- libstdc++-devel-4.1.2

- make-3.81

- sysstat-7.0.2

- unixODBC-2.2.11

- unixODBC-2.2.11 (32 bit)

- unixODBC-devel-2.2.11

- unixODBC-devel-2.2.11 (32 bit)

On the sample system with OEL 5.3 and the default + development packages installed, only the following RPMs were missing:

rpm -ihv libaio-devel-0.3.106-3.2.* libstdc++43-devel-4.3.2-7.el5.* sysstat-7.0.2-3.el5.x86_64.rpm unixODBC-2.2.11-7.1.* unixODBC-devel-2.2.11-7.1.*
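To find out which packages are missing on your own system, a loop over `rpm -q` is usually enough. The sketch below checks package names only (no versions, no 32-bit variants); the function name and the `PKG_QUERY` override variable are made up for illustration.

```shell
#!/bin/bash
# Print every package name from the argument list that the query
# command does not report as installed.
# PKG_QUERY is a hypothetical override hook; it defaults to "rpm -q".
missing_packages() {
    local pkg
    for pkg in "$@"; do
        ${PKG_QUERY:-rpm -q} "$pkg" >/dev/null 2>&1 || echo "$pkg"
    done
}

# Package names (without versions) taken from the list above
missing_packages binutils compat-libstdc++-33 elfutils-libelf \
    elfutils-libelf-devel gcc gcc-c++ glibc glibc-common glibc-devel \
    glibc-headers ksh libaio libaio-devel libgcc libstdc++ \
    libstdc++-devel make sysstat unixODBC unixODBC-devel
```

An empty output means that every package name is known to rpm; versions and the 32-bit variants still need to be compared against the list by hand.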

Shell Limits

/etc/security/limits.conf

grid soft nproc 16384

grid hard nproc 16384

grid soft nofile 65536

grid hard nofile 65536

grid soft stack 10240

grid hard stack 10240

In /etc/pam.d/login add the following line if it does not exist:

session required pam_limits.so

Kernel Limits (MINIMUM values) in /etc/sysctl.conf

kernel.sem=250 32000 100 128

kernel.shmall=2097152

kernel.shmmax=536870912

kernel.shmmni=4096

fs.file-max=6815744

fs.aio-max-nr=1048576

net.ipv4.ip_local_port_range=9000 65500

net.core.rmem_default=262144

net.core.rmem_max=4194304

net.core.wmem_default=262144

net.core.wmem_max=1048576

– SuSE only –

vm.hugetlb_shm_group=<gid of osdba group>

The values in /etc/sysctl.conf should be tuned (e.g. according to the number of instances, available memory, number of connections, …)
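After editing /etc/sysctl.conf and loading it with "sysctl -p", the running values can be compared against the minimums. The following is a rough sketch, not an official check: the helper name is made up, and only the single-valued keys from the list above are covered.

```shell
#!/bin/bash
# Succeeds when value $1 is numerically smaller than $2.
# Compares digit strings by length first, so very large values
# reported by newer kernels do not overflow shell arithmetic.
below_minimum() {
    local cur=$1 min=$2
    if [ "${#cur}" -ne "${#min}" ]; then
        [ "${#cur}" -lt "${#min}" ]
    else
        [[ "$cur" < "$min" ]]
    fi
}

# Single-valued keys and minimums from the list above; kernel.sem and
# net.ipv4.ip_local_port_range hold several values and are skipped here.
while read -r key minimum; do
    current=$(sysctl -n "$key" 2>/dev/null || true)
    if [ -z "$current" ]; then
        echo "$key: could not be read"
    elif below_minimum "$current" "$minimum"; then
        echo "$key: $current is below the minimum $minimum"
    else
        echo "$key: $current ok"
    fi
done <<'EOF'
kernel.shmall 2097152
kernel.shmmax 536870912
kernel.shmmni 4096
fs.file-max 6815744
fs.aio-max-nr 1048576
net.core.rmem_default 262144
net.core.rmem_max 4194304
net.core.wmem_default 262144
net.core.wmem_max 1048576
EOF
```

Run it as root on every node; any line reporting "below the minimum" points to a value that still has to be raised.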

Kernel Limits on Linux (Calculate them)

kernel.sem

semmns = Total number of semaphores systemwide =

2 * sum (process parameters of all database instances on the system)

+ overhead for background processes

+ system and other application requirements

semmsl = maximum number of semaphores in each set

semmni = total number of semaphore sets = semmns divided by semmsl, rounded UP to the nearest multiple of 1024

kernel.sem = <semmsl semmns semopm semmni>

semmsl = set to 256

semmns = set to the total number of semaphores (see above!)

semopm = 100; not explicitly described in the documentation

semmni = see calculation above
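The calculation can be sketched as a shell function. The function name is made up, and the input numbers in the example (1500 processes in total, 1000 semaphores of overhead) are purely illustrative; you have to estimate both for your own system.

```shell
#!/bin/bash
# Derive a kernel.sem line from the rules above.
#   $1 = sum of the "processes" parameters of all instances on the host
#   $2 = extra semaphores for background processes and other software
#        (a value you have to estimate yourself)
kernel_sem_line() {
    local processes_sum=$1 overhead=$2
    local semmsl=256 semopm=100
    local semmns=$(( 2 * processes_sum + overhead ))
    # number of semaphore sets needed, rounded up to a whole set ...
    local sets=$(( (semmns + semmsl - 1) / semmsl ))
    # ... and then rounded up to the next multiple of 1024
    local semmni=$(( (sets + 1023) / 1024 * 1024 ))
    echo "kernel.sem = $semmsl $semmns $semopm $semmni"
}

# Illustrative input: 1500 processes in total, 1000 semaphores overhead
kernel_sem_line 1500 1000
```

With these example numbers the function prints "kernel.sem = 256 4000 100 1024".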

kernel.shmall

kernel.shmall = This parameter sets the total amount of shared memory pages that can be used system wide. Hence, SHMALL should always be at least ceil(shmmax/PAGE_SIZE). PAGE_SIZE is usually 4096 bytes unless you use Big Pages or Huge Pages which supports the configuration of larger memory pages. (quoted from: www.puschitz.com/TuningLinuxForOracle.shtml)
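As a quick worked example of the ceil(shmmax/PAGE_SIZE) rule, the sketch below computes the smallest shmall for a given shmmax. The function name is made up, and a page size of 4096 bytes is assumed unless a second argument is given.

```shell
#!/bin/bash
# Smallest kernel.shmall (in pages) that still covers a given
# kernel.shmmax (in bytes).
#   $1 = shmmax in bytes
#   $2 = page size in bytes (4096 is assumed if omitted)
min_shmall_pages() {
    local shmmax=$1 page_size=${2:-4096}
    echo $(( (shmmax + page_size - 1) / page_size ))
}

# shmmax from the minimum values above (512 MB)
min_shmall_pages 536870912
```

For the minimum shmmax of 536870912 bytes this prints 131072 pages, so the minimum shmall of 2097152 listed above is already large enough. The actual page size of a system can be read with "getconf PAGE_SIZE".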

kernel.shmmax

kernel.shmmax = the maximum size in bytes of a single shared memory segment that a Linux process can allocate

If not set properly database startup can fail with:

ORA-27123: unable to attach to shared memory segment

kernel.shmmni

kernel.shmmni = system wide number of shared memory segments; Oracle recommendation for 11g Release 1: “at least 4096”; I did not find anything for Release 2.

fs.file-max

fs.file-max = maximum number of open files system-wide; must be at least “6815744”

fs.aio-max-nr

fs.aio-max-nr = concurrent outstanding i/o requests; must be set to “1048576”

net.ipv4.ip_local_port_range

net.ipv4.ip_local_port_range = minimum and maximum ports for use; must be set to “9000” as minimum and “65500” as maximum

net.core.rmem_default

net.core.rmem_default = the default size in bytes of the receive buffer; must be set at least to “262144”

net.core.rmem_max

net.core.rmem_max = the maximum size in bytes of the receive buffer; must be set at least to “4194304”

net.core.wmem_default

net.core.wmem_default = the default size in bytes of the send buffer; must be set at least to “262144”

net.core.wmem_max

net.core.wmem_max = the maximum size in bytes of the send buffer; must be set at least to “1048576”

Networking

Basic facts

- Works completely differently from 10g or 11g R1!

- At least two separate networks (public and private) and therefore two network interfaces are required

- ATTENTION: Interface names must be equal on ALL nodes! (i.e. if the private network interface on node A is eth2, the private network interface on all other nodes must be named eth2 as well)

- Recommendation: Use bonding for:

- Static naming (even if you use only one interface per bond)

- Failover / Load Sharing

- –> we will use network bonding with only one interface in the following

- IP addresses can be assigned by two schemes:

- GNS (grid naming service) –> automatic IP numbering

- Manual Mode

- –> we will use manual IP addressing mode in the following

- GNS mode requires:

- one fixed public IP for each node

- one DHCP virtual IP for each node

- one DHCP or fixed private IP for each node

- three DHCP IPs for the SCAN

- Thoughts by the author:

- new

- more complex

- if it works, adding a node is quite easy, at least from the IP numbering point of view; but how often do you add a node?

- Manual Mode IP addressing requires:

- one public IP for each node

- one virtual IP for each node

- one private IP for each node

- one to three (recommended) IPs for providing the SCAN name

Naming schema used in the following (remember: 2-node-cluster)

Configure Network Bonding

In /etc/modprobe.conf add the lines:

alias bond0 bonding

alias bond1 bonding

options bonding miimon=100 mode=1 max_bonds=2

(“mode=1” means active/passive failover… see “bonding.txt” in kernel sources for more options)

/etc/sysconfig/network-scripts/ifcfg-bond0 looks like:

DEVICE=bond0

BOOTPROTO=none

ONBOOT=yes

NETWORK=192.168.180.0

NETMASK=255.255.255.0

IPADDR=192.168.180.10

USERCTL=no

/etc/sysconfig/network-scripts/ifcfg-eth0 looks like:

DEVICE=eth0

BOOTPROTO=none

ONBOOT=yes

MASTER=bond0

SLAVE=yes

USERCTL=yes

(Note: Add a second interface to achieve real fault tolerance; for our testing environment we use bonding only to provide a consistent naming scheme)

The configuration for bond1 is not shown… just alter interface names and IPs.

Configure NTP

Grid Infrastructure provides ntp-like time synchronization with “ctss” (cluster time synchronization service). ctssd is provided in case connections to ntp servers are not possible.

If no running and configured ntpd is found (i.e. after “chkconfig ntpd off” and “rm /etc/ntp.conf”), ctssd will be used; if ntpd is found, ctssd will start in observer mode.

ATTENTION: If you use ntp, set the “-x” flag in /etc/sysconfig/ntpd to prevent ntpd from stepping the clock!
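On Enterprise Linux 5 the flag is normally added to the OPTIONS line of /etc/sysconfig/ntpd. The line below is a sketch that assumes the stock options (user ntp, pid file under /var/run) are otherwise unchanged; keep whatever other options your file already contains.

```shell
# /etc/sysconfig/ntpd
# -x makes ntpd slew (gradually adjust) the clock instead of stepping it
OPTIONS="-x -u ntp:ntp -p /var/run/ntpd.pid"
```

Restart ntpd afterwards (for example with "service ntpd restart") so that the new option takes effect.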

Check if NTP is working

- start “ntpq”

- enter “opeer” to see list of all peers

In our example two peers: host “nb-next-egner” and the local clock

enter “as” to see associations

“sys.peer” means the clock is synchronized against this peer; the entries appear in the same order as in “opeer”, so the first entry is host “nb-next-egner”, which is fine!

“reject” means the clock is not synchronized against this peer, for various reasons

enter “rv” for detailed information

SCAN

- SCAN = Single Client Access Name; new concept in 11g R2

- DNS-based

- naming notation: <name of cluster>-scan.<domain>

- for our cluster named “rac” with domain “regner.de” this is rac-scan.regner.de

- You need at least ONE – better three IPs for the new database access schema called SCAN

- IPs are configured in DNS (forward and reverse lookup);

- !! using local hosts file failed verification after grid installation !!

- forward- and reverse lookup needs to be configured

- excerpt from zone file:

rac-scan IN A 192.168.180.6

rac-scan IN A 192.168.180.7

rac-scan IN A 192.168.180.8

After installation we will find three listeners running from grid infrastructure home:

bash# srvctl status scan_listener

SCAN Listener LISTENER_SCAN1 is enabled

SCAN listener LISTENER_SCAN1 is running on node rac1

SCAN Listener LISTENER_SCAN2 is enabled

SCAN listener LISTENER_SCAN2 is running on node rac2

SCAN Listener LISTENER_SCAN3 is enabled

SCAN listener LISTENER_SCAN3 is running on node rac2

Connection to database “RAC11P” using SCAN would use this tnsnames entry:

RAC11P =

(DESCRIPTION=

(ADDRESS=(PROTOCOL=tcp)(HOST=rac-scan.regner.de)(PORT=1521))

(CONNECT_DATA=(SERVICE_NAME=RAC11P))

)

The “old fashioned” way still works:

RAC11P_old =

(DESCRIPTION=

(ADDRESS_LIST=

(ADDRESS=(PROTOCOL=tcp)(HOST=rac1-vip.regner.de)(PORT=1521))

(ADDRESS=(PROTOCOL=tcp)(HOST=rac2-vip.regner.de)(PORT=1521))

)

(CONNECT_DATA=(SERVICE_NAME=RAC11P))

)

Connecting to a named instance:

RAC11P =

(DESCRIPTION=

(ADDRESS=(PROTOCOL=tcp)(HOST=rac-scan.regner.de)(PORT=1521))

(CONNECT_DATA=(SERVICE_NAME=RAC11P)

(INSTANCE_NAME=RAC11P1))

)

Check DNS for SCAN

Update [16th October 2009]: If you do not have a working DNS server available, refer here to set up your own.

Forward lookup

Use “dig” to check: “dig rac-scan.regner.de”

Reverse lookup

Use “dig -x” to check

dig -x 192.168.180.6

dig -x 192.168.180.7

dig -x 192.168.180.8

Create User and Group

Create Group

groupadd -g 500 dba

Note: For educational purposes we use only one group. In production environments there should be more groups to separate administrative duties.

Create User

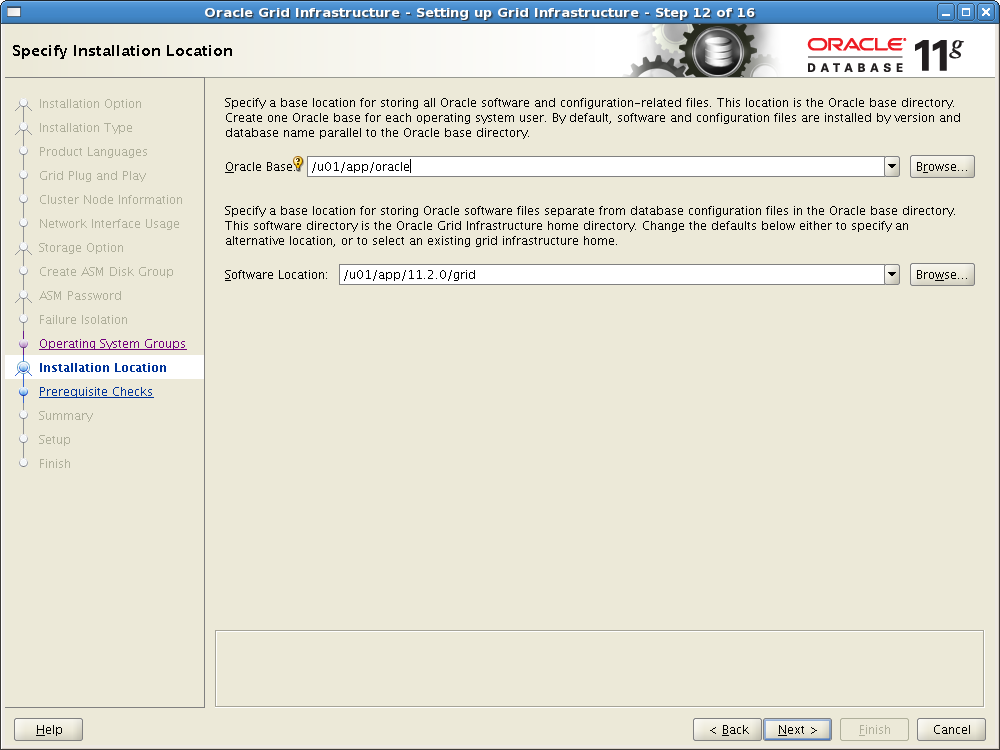

mkdir -p /u01/app/11.2.0/grid

useradd -g dba -u 500 -d /u01/app/11.2.0/grid grid

passwd grid

chown -R root:dba /u01

chmod -R 775 /u01

chown -R grid:dba /u01/app/11.2.0/grid

Note: Oracle recommends different users for grid and database installation!

Make sure groupid and userid are the same on ALL nodes!

Create profile file (~/.bash_profile or ~/.profile on SuSE) for user “grid”

umask 022

if [ -t 0 ]; then

stty intr ^C

fi

Prepare and Configure Storage

- Requirements

- must be visible on all nodes

- as always – recommendation: SAME (stripe and mirror everything)

- What to store where:

- OCR and Voting disk

- ASM

- NFS

- RAW disks (deprecated; read documentation!)

- Oracle Clusterware binaries

- Oracle RAC binaries

- Oracle database files

- Oracle recovery files

Install RPMs

- oracleasmsupport

- oracleasmlib

- oracleasm-<kernel-version>

(see “Sources” for download location)

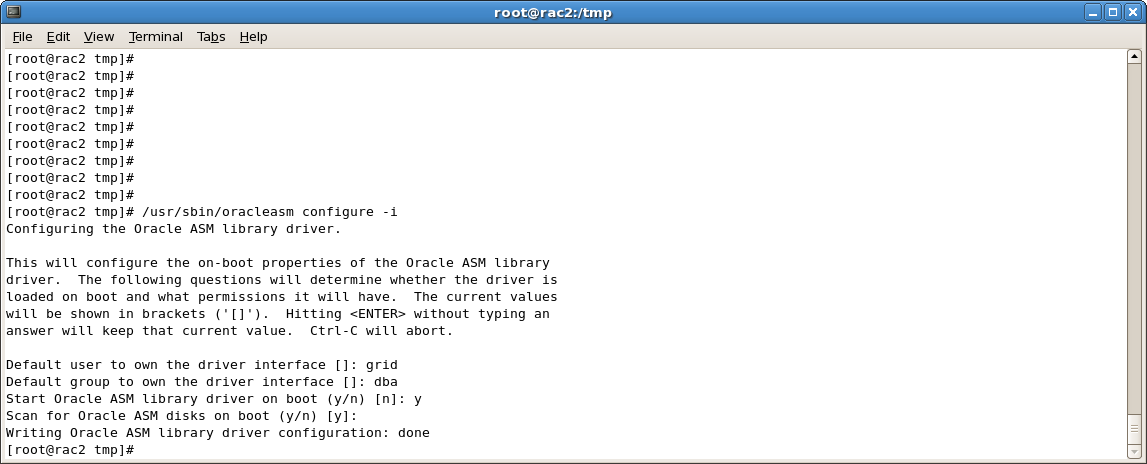

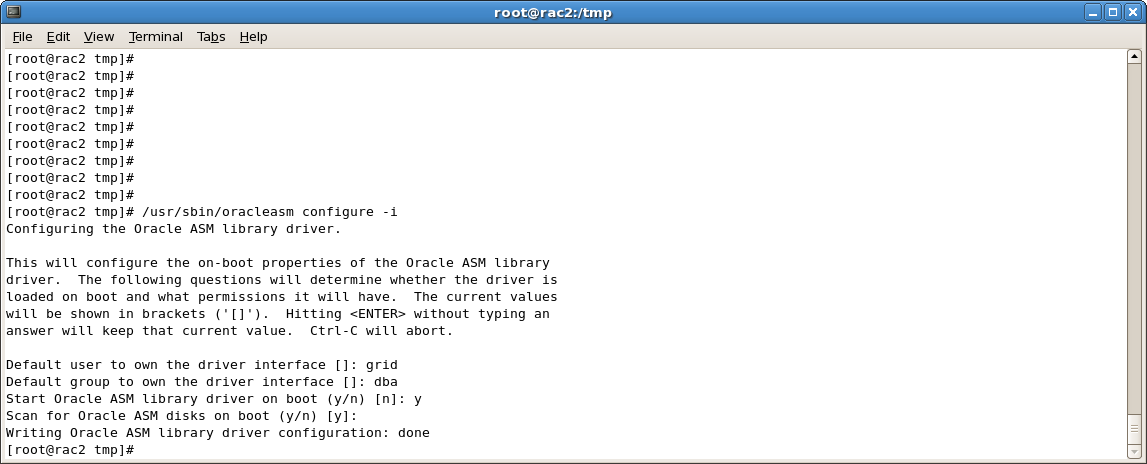

Configure ASM

/usr/sbin/oracleasm configure -i

Initialize ASM

/usr/sbin/oracleasm init

Create Partitions on disk with fdisk

In the following example disk /dev/sde (this is our iSCSI storage) does not contain a partition at all – we will create one

Create one whole disk partition on /dev/sde

Label all disks with asm label

Query disks on all nodes – Node “rac1”

–> all disks visible with correct label

Query disks on all nodes – Node “rac2” (the other node)

–> also all four LUNs visible
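The labelling and query steps above are done with the oracleasm tool. The sketch below only prints the commands so they can be reviewed before being run as root; the function name, the label prefix "DISK" and the partition names are placeholders, not necessarily the values used on the sample system.

```shell
#!/bin/bash
# Print the oracleasm commands for labelling a list of partitions.
# The label prefix and the device names are placeholders.
asm_label_commands() {
    local prefix=$1 i=1 part
    shift
    for part in "$@"; do
        echo "/usr/sbin/oracleasm createdisk ${prefix}${i} ${part}"
        i=$(( i + 1 ))
    done
}

# On the first node: review the printed commands, then run them as root
asm_label_commands DISK /dev/sde1 /dev/sdf1

# On every other node the labels are picked up and listed with:
echo "/usr/sbin/oracleasm scandisks"
echo "/usr/sbin/oracleasm listdisks"
```

Labels are written to the disk only once, on one node; the other nodes just rescan and should then list the same labels.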

OCR and Voting disks

- Will be placed in ASM (new in 11g R2)

- three different redundancy levels:

- External – 1 disk minimum needed

- Normal – 3 disks minimum needed

- High – 5 disks minimum needed

- Storage Requirements

- External – 280 MB OCR + 280 MB Voting Disk

- Normal – 560 MB OCR + 840 MB Voting Disk

- High – 840 MB OCR + 1.4 GB Voting Disk

- plus Overhead for ASM Metadata

Overhead for ASM metadata

total =

[2 * ausize * disks]

+ [redundancy * (ausize * (nodes * (clients + 1) + 30) + (64 * nodes) + 533)]

redundancy = Number of mirrors: external = 1, normal = 2, high = 3.

ausize = Metadata AU size in megabytes.

nodes = Number of nodes in cluster.

clients = Number of database instances for each node.

disks = Number of disks in disk group.

For example, for a four-node Oracle RAC installation, using three disks in a normal redundancy disk group, you require 1684 MB of space for ASM metadata:

[2 * 1 * 3]

+ [2 * (1 * (4 * (4 + 1)+ 30)+ (64 * 4)+ 533)]

= 1684 MB
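The formula is easy to evaluate with shell arithmetic. The following sketch uses a made-up function name and takes the five variables in the order given in the comment; it reproduces the four-node example above.

```shell
#!/bin/bash
# ASM metadata overhead in MB, following the formula above.
#   $1 = redundancy (1 = external, 2 = normal, 3 = high)
#   $2 = AU size in MB
#   $3 = nodes in the cluster
#   $4 = clients (database instances for each node)
#   $5 = disks in the disk group
asm_metadata_mb() {
    local redundancy=$1 ausize=$2 nodes=$3 clients=$4 disks=$5
    local per_mirror=$(( ausize * (nodes * (clients + 1) + 30) + 64 * nodes + 533 ))
    echo $(( 2 * ausize * disks + redundancy * per_mirror ))
}

# Four-node example from the text: normal redundancy, 1 MB AU, 3 disks
asm_metadata_mb 2 1 4 4 3
```

This prints 1684, matching the example calculation. Plugging in your own node, instance and disk counts shows how much space to reserve in addition to the OCR and voting disk sizes.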

OCR and Voting disks – recommendations

- use high redundancy for OCR and Voting disks – the correct function of your cluster depends on it!

- use 5 disks with 10 GB each – enough space for all files plus ASM metadata plus space for further growth

Checklist

- Storage visible

- user and groups created

- Kernel parameters configured

- RPM Packages checked / installed

- NTP working

- DNS working

- Connection (ping, ssh) between nodes working?

- Backup available for rollback?

–> Alright! Let's start the binary installation

Installing

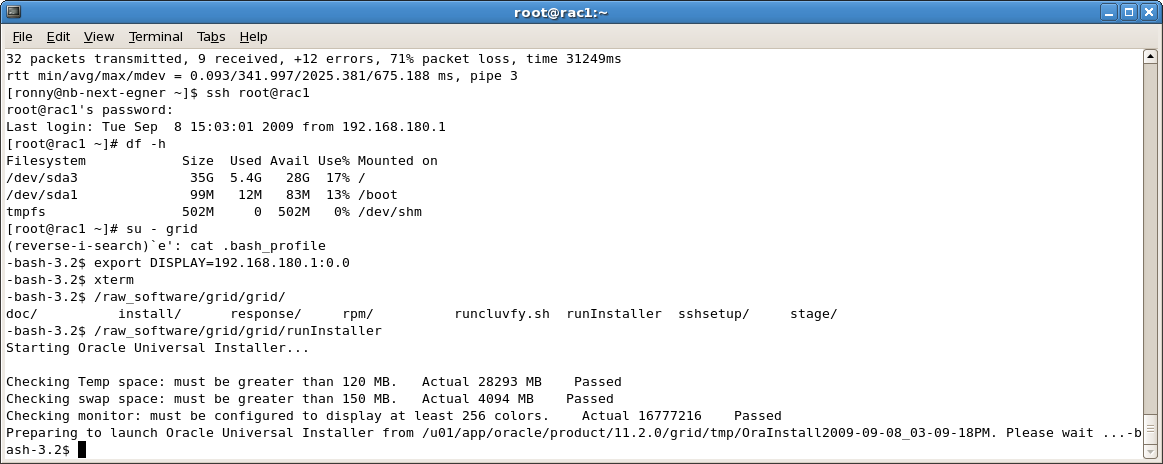

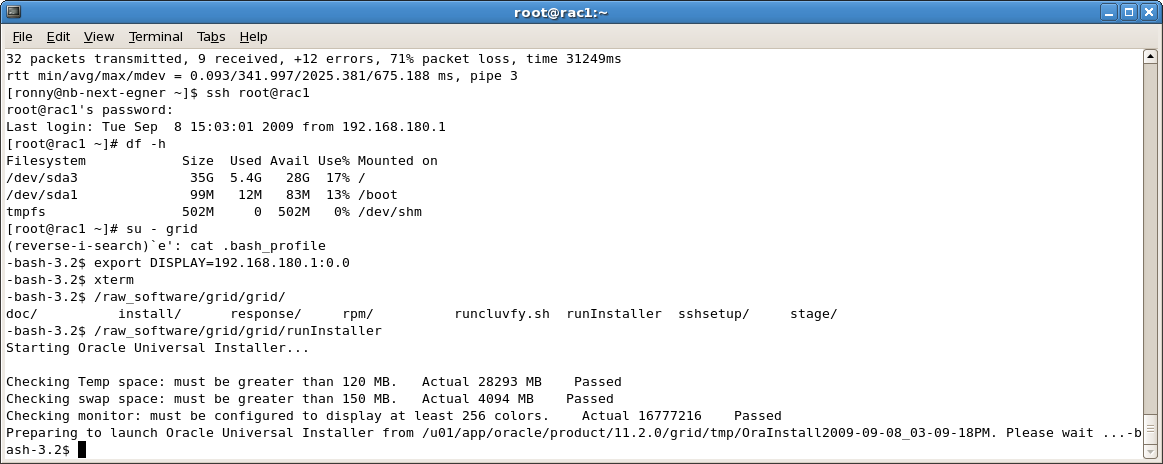

Start installation as user “grid” (on one node (here on node “rac1”))

Remember: We choose not to use GNS; so it is deselected

The node on which the installer was started is already added by default; add all other nodes here (in our case we added “rac2”)

Click on “SSH Connectivity”, enter username and password and click on “Setup”

If everything worked the following message appears

If there are problems check:

- Group ID and User ID on both nodes

- Connectivity between both nodes

- Passwords

Select which interface is the public and which the private one

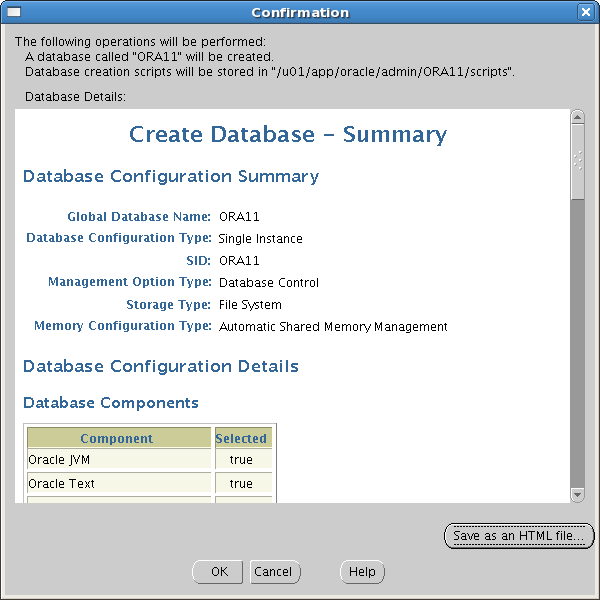

Where to place OCR and Voting disk… in our case we use ASM for everything

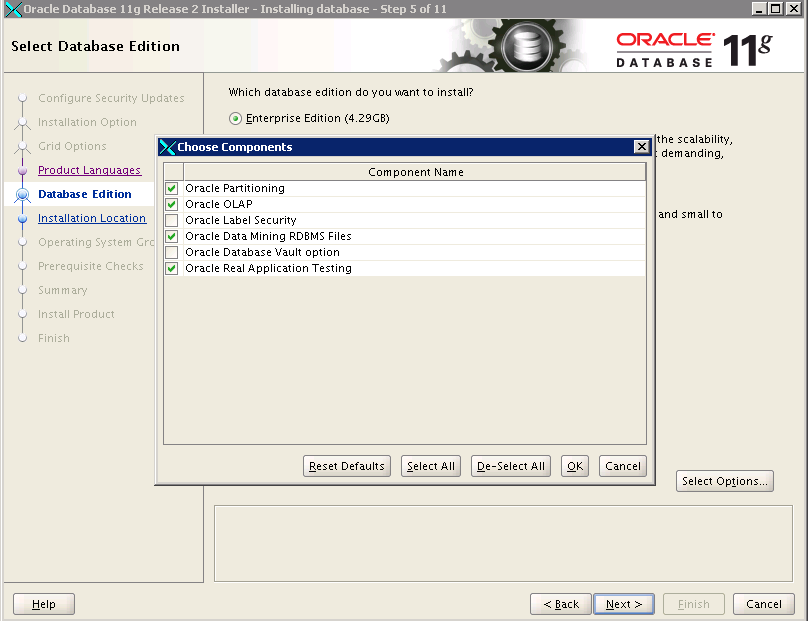

For storing OCR and Voting disk we need to create a disk group; our first disk group is called “DATA1” and consists of the four LUNs we prepared and labeled before… here we see again the names we labeled the disks with. We choose “normal” redundancy, which will create a mirror.

Specify passwords for ASM and ASMSNMP… choose strong passwords if possible (I was lazy and chose rather weak ones, which is acceptable for educational purposes but not in real production scenarios)

Grid Infrastructure can use IPMI for fencing… VMware does not have IPMI

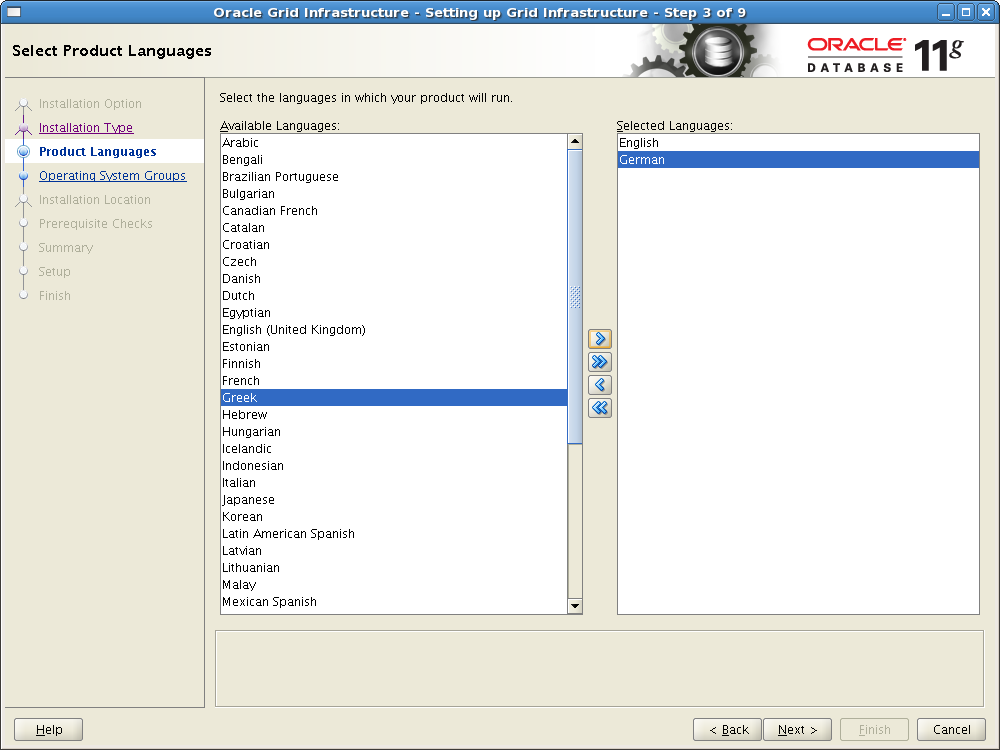

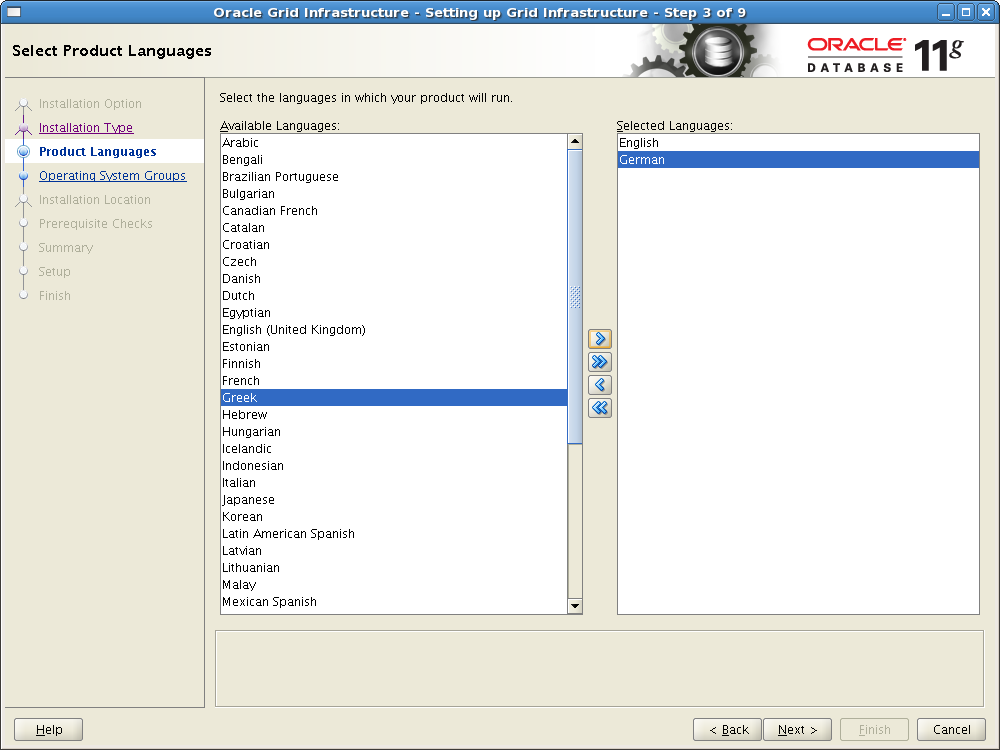

group mapping… for role separation… we have only “dba”; change according to your needs

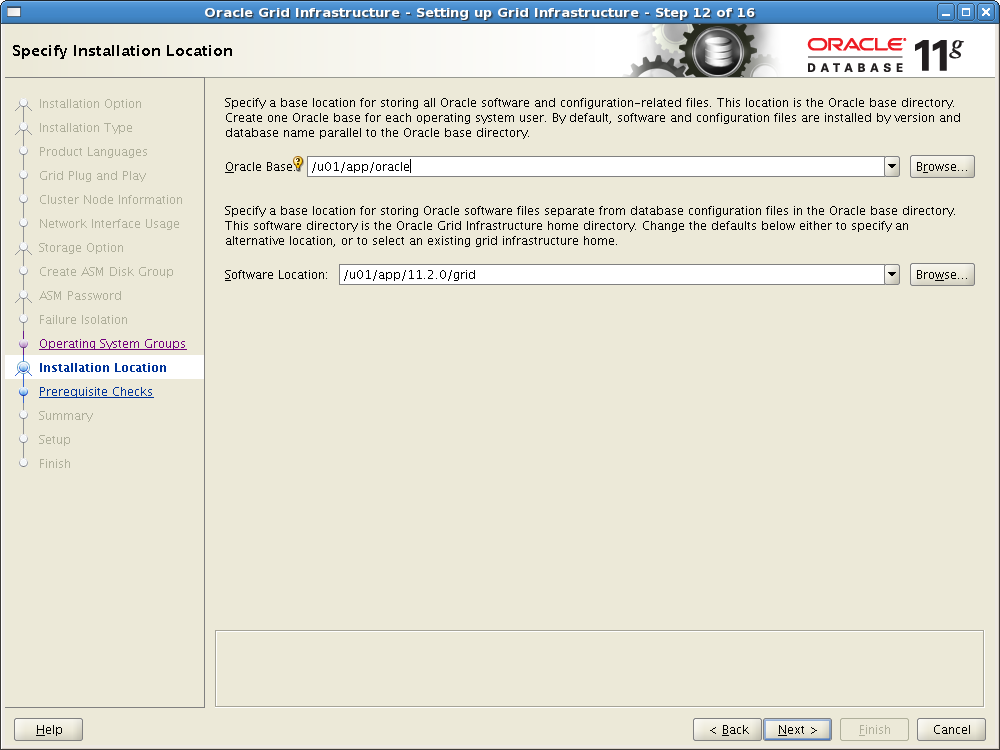

Set Oracle Base and software (install) location… the software location must not be under the Oracle Base location… otherwise the installer throws an error saying so

Inventory location…

Make sure you fix every issue reported here (memory and swap size are limited on our virtual machines, so they cannot be fixed here… but they should be fixed on a real system)

Ready..

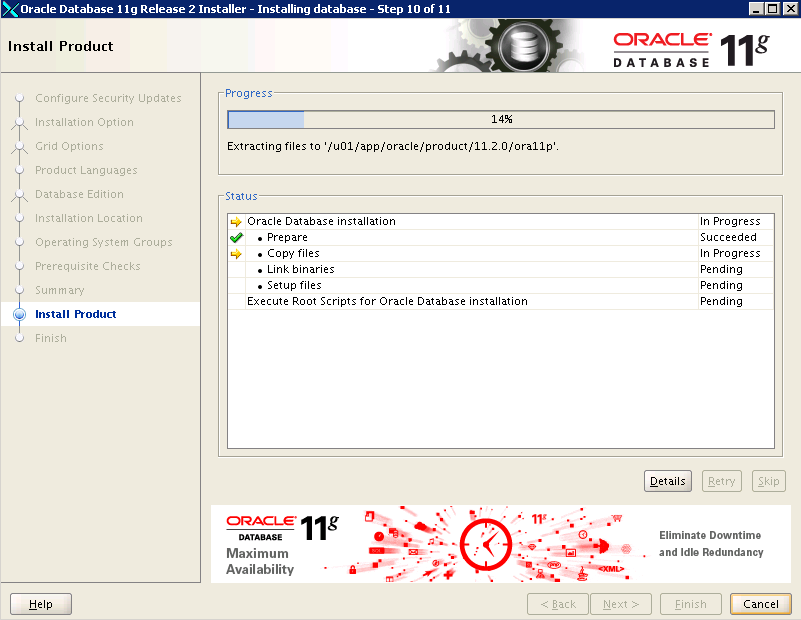

Installing…

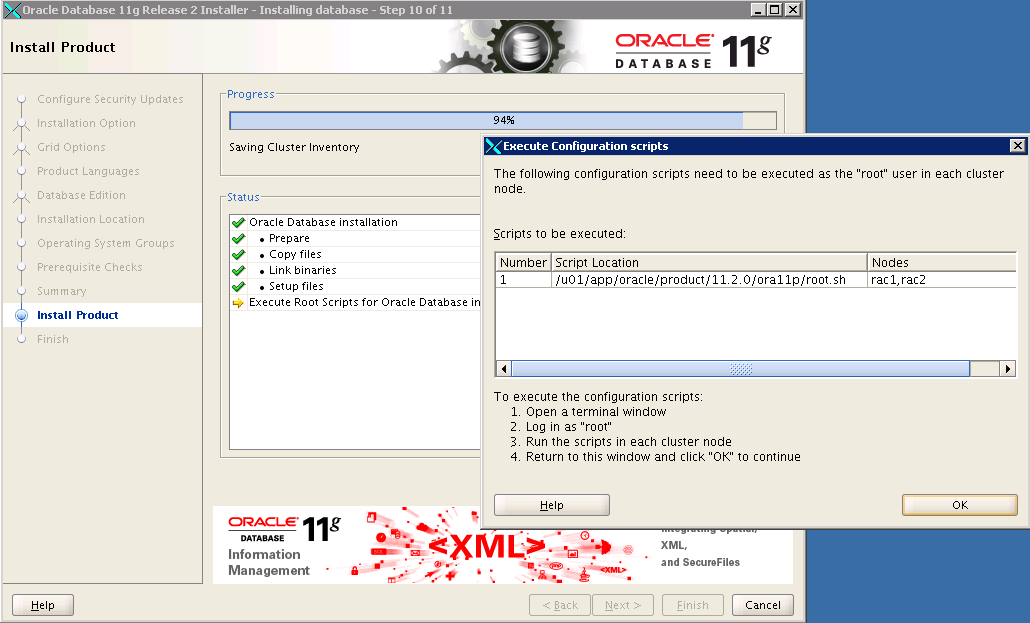

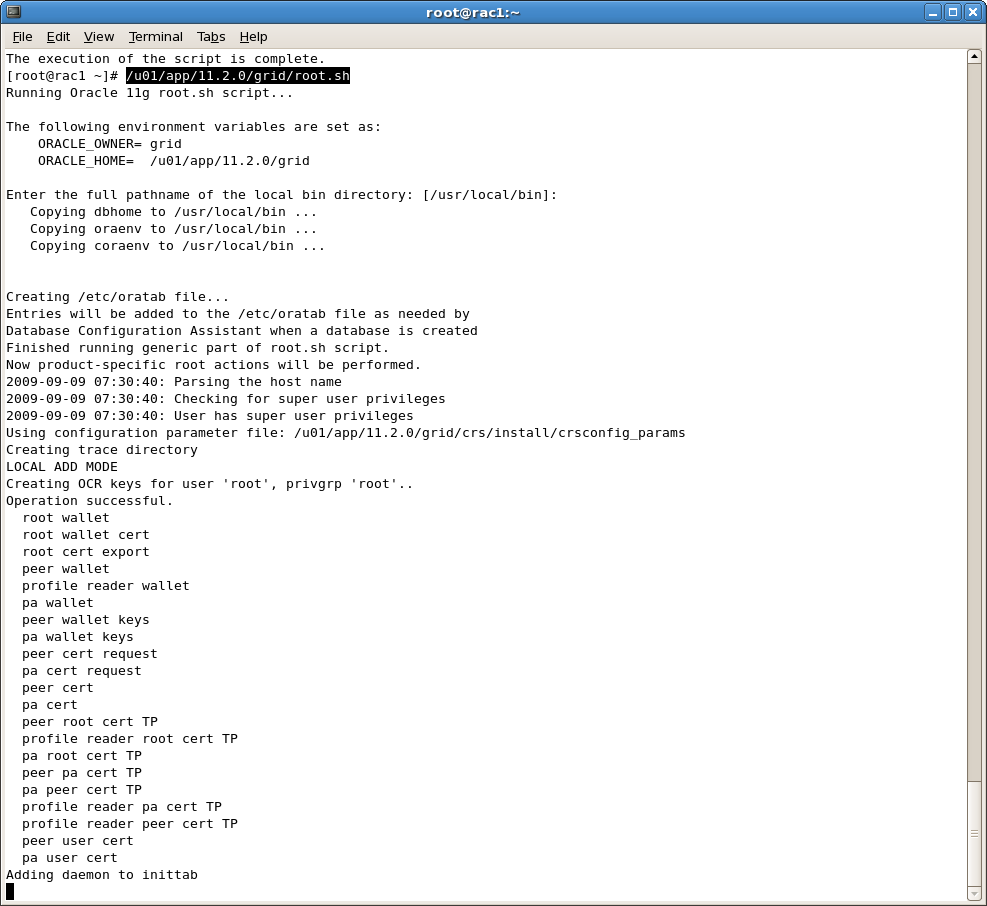

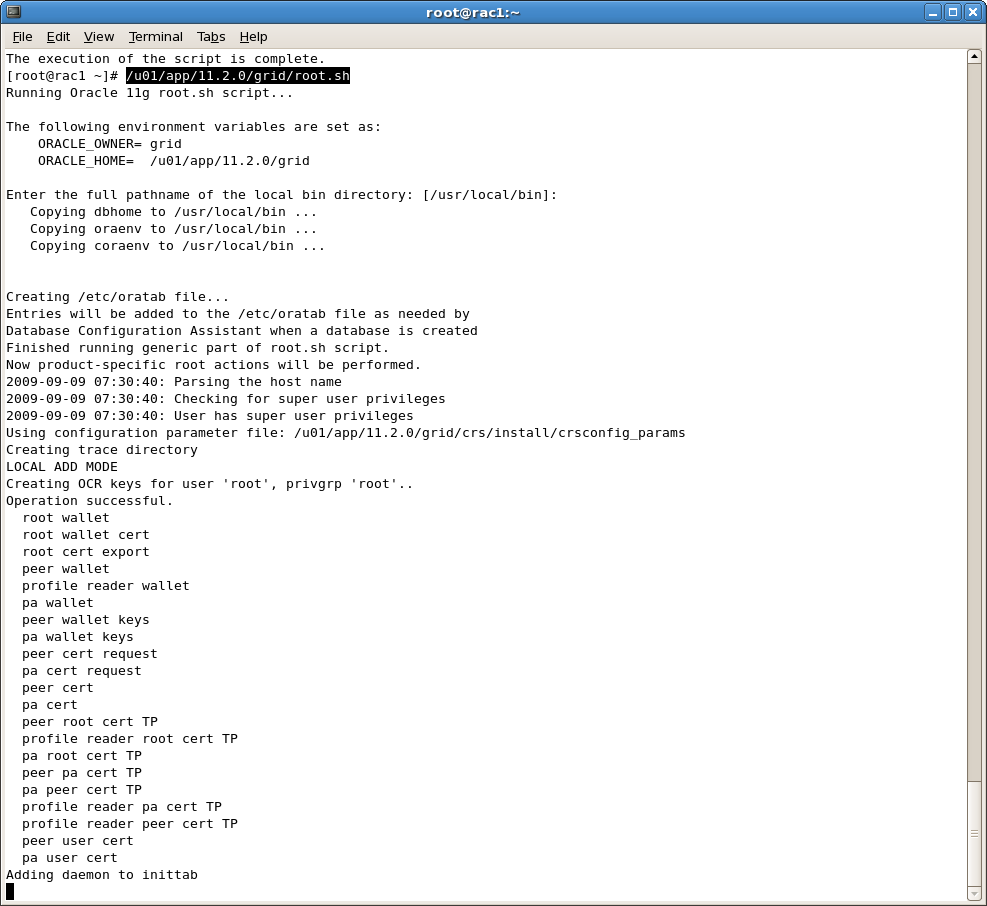

Post-Installation scripts to be started in the following order:

- orainstRoot.sh on node rac1

- orainstRoot.sh on node rac2

- root.sh on node rac1

- root.sh on node rac2

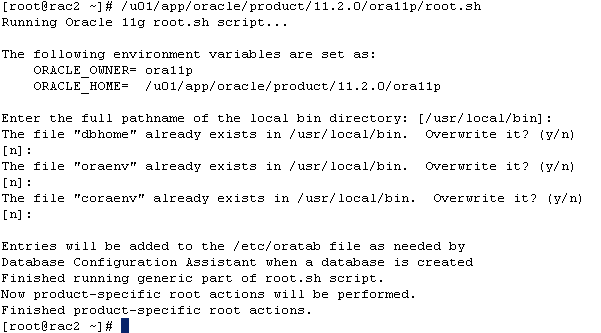

Sample of root.sh output

The full output can be found in rac-install-node1 and rac-install-node2.

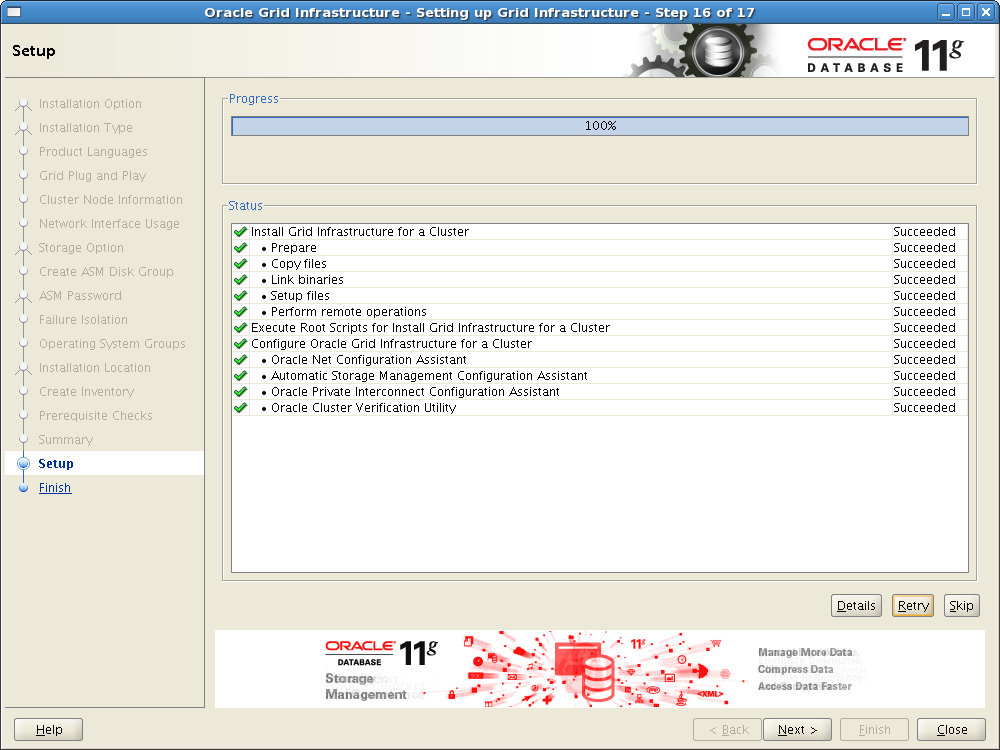

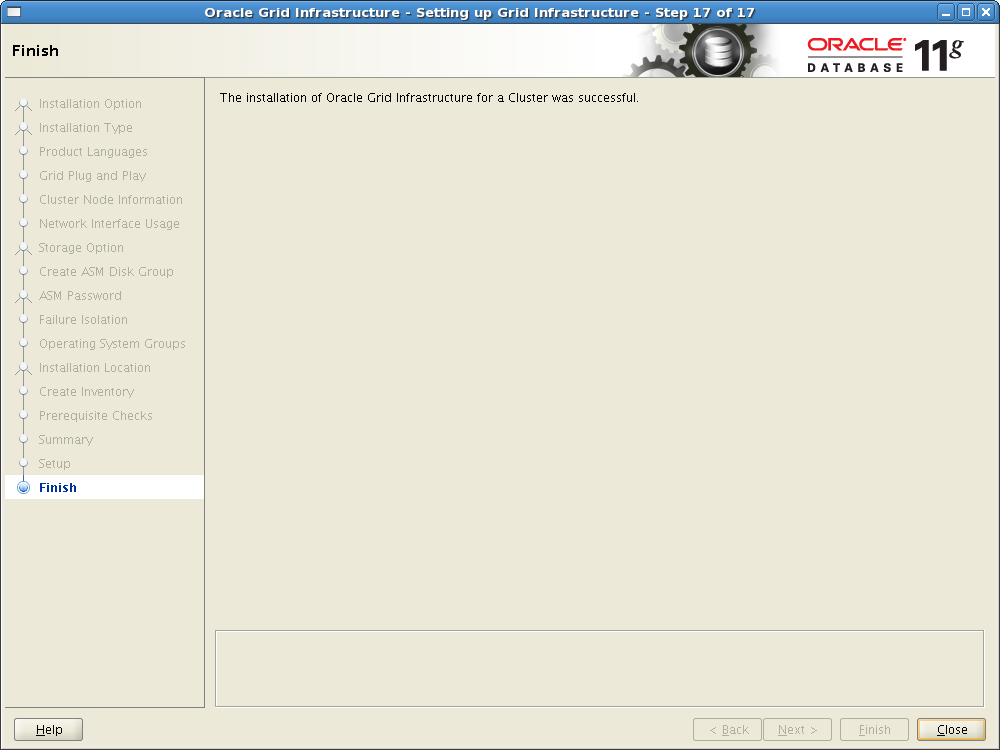

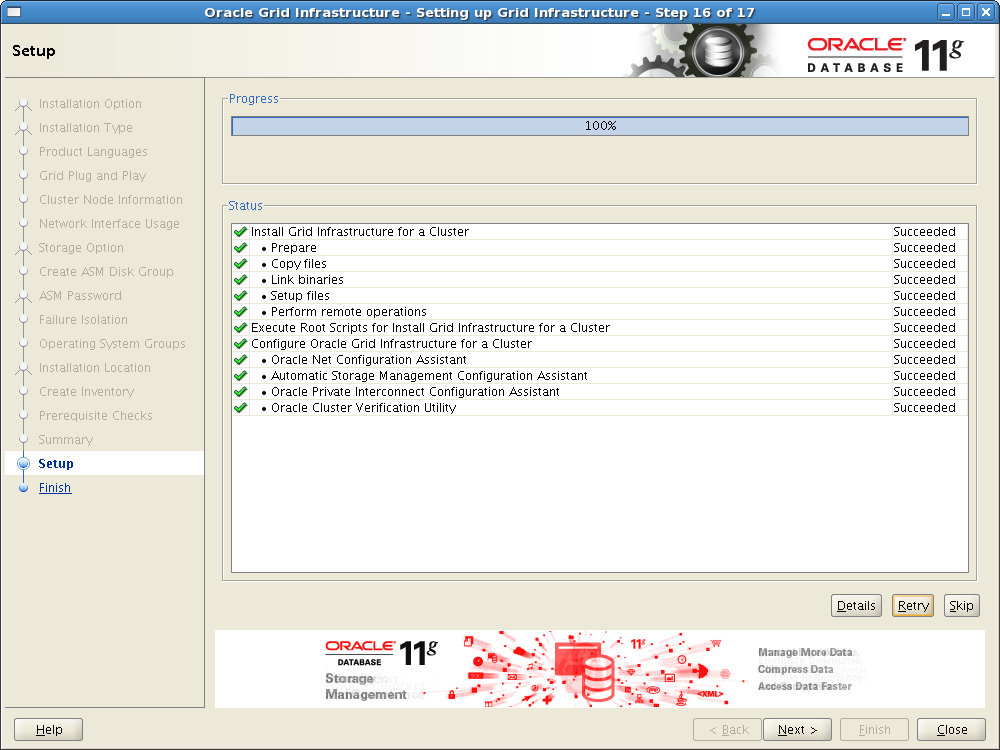

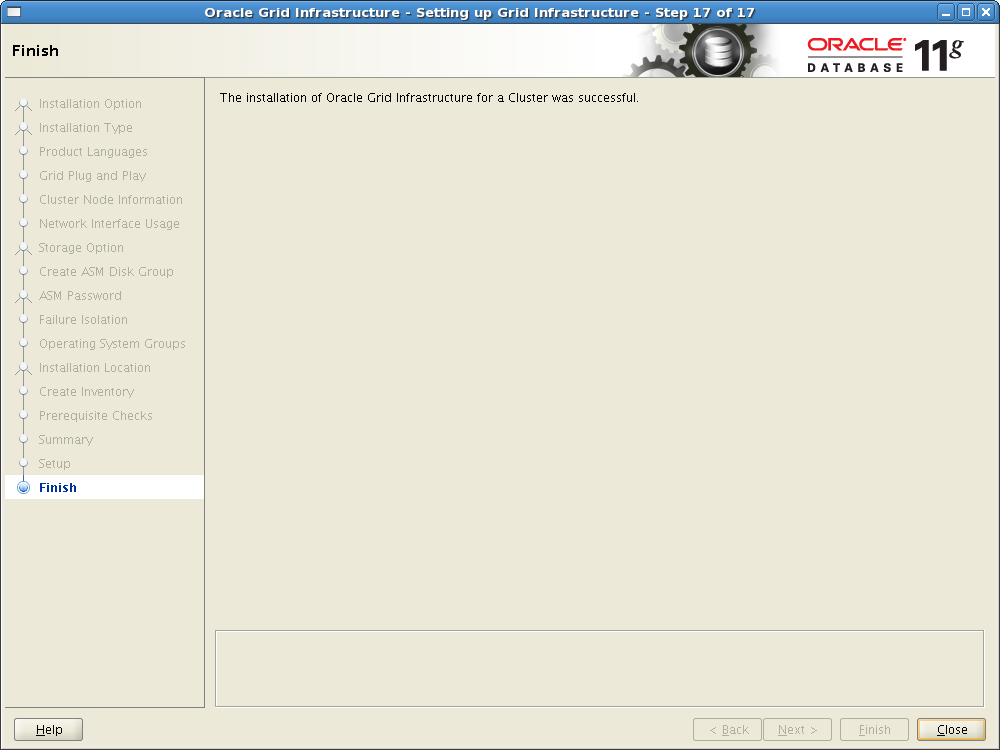

If everything works as expected the result should look like this:

FINISHED

If there are problems:

- Look at the log files located in /u01/app/oraInventory/logs

- Fix the issues noted here

- If this does not work out: Search Metalink / Open SR

Where to go now?

- We just installed the Infrastructure needed for RAC, i.e. ASM and Clusterware

- Install diagnostic utilities (strongly recommended)

- Tune Kernel parameters (if not done before)

- Create at least TWO more disk groups:

- one for holding database files (i.e. datafiles and binary installation files)

- one to be used as flashback recovery area

- Backup current configuration

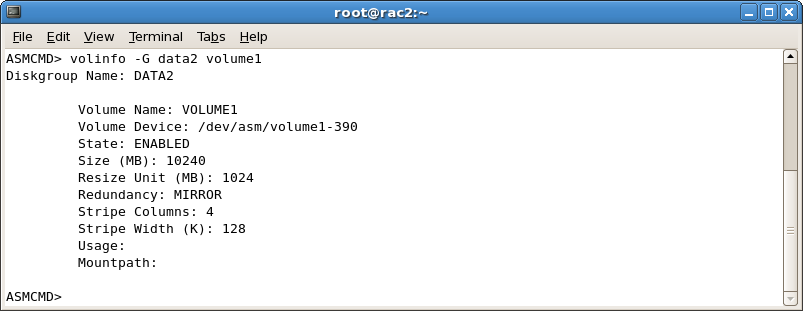

- some ADVM (ASM Dynamic Volume Manager) and ACFS (ASM Cluster File system) foundations can be found here

- now we need to install a RAC database – this is covered here and here